To use zram, zsmalloc needs to be enabled in the Linux kernel. zsmalloc in turn offers two methods to access allocations that span multiple pages: copy-based or using VM mapping. Depending on the platform, one or the other is faster, and the configuration option already suggests that on ARM the VM mapping method is typically faster. Hence I was wondering whether that is also true for ARM64 platforms (running in AArch64 mode). Outcome: on a quad-core Cortex-A35 platform running Linux 4.14, VM mapping was ~20-50% faster.

iptables prevents nftables from being loaded

I have been using nftables for my firewalling needs for a while now. My nftables.conf has some prerouting settings. After playing with Docker, I could no longer reload my nftables configuration:

/etc/nftables.conf:12:9-18: Error: Could not process rule: Device or resource busy
  chain prerouting {
        ^^^^^^^^^^

Disabling the Docker service did not help either. It seems that the kernel module iptable_nat needs to be removed, but it is currently in use:

# rmmod iptable_nat
rmmod: ERROR: Module iptable_nat is in use

Some iptables rules/chains are still active and prevent the module from unloading. After clearing the iptables configuration, especially the nat table, it is possible to remove iptable_nat and then use nftables again.

iptables -F
iptables -X
iptables -t nat -F
iptables -t nat -X
iptables -t mangle -F
iptables -t mangle -X
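The whole recovery sequence can be wrapped in a small shell function. This is only a sketch under a few assumptions: the function name and the `DRY_RUN` variable are made up for illustration, `-t filter` is spelled out (it is the default table when `-t` is omitted), and the nftables configuration is assumed to live in /etc/nftables.conf as in the error message above.

```shell
#!/bin/sh
# Sketch: flush the legacy iptables state so that iptable_nat can be
# unloaded, then reload the nftables ruleset.
# DRY_RUN is a hypothetical helper variable: when set to 1 the commands
# are only printed, not executed.

run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

reset_iptables_and_reload_nft() {
    for table in filter nat mangle; do
        run iptables -t "$table" -F || return 1   # flush all rules
        run iptables -t "$table" -X || return 1   # delete user-defined chains
    done
    run rmmod iptable_nat || return 1             # should no longer be "in use"
    run nft -f /etc/nftables.conf                 # load the nftables ruleset
}
```

Running `DRY_RUN=1; reset_iptables_and_reload_nft` first shows what would be executed; the real run needs root privileges.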

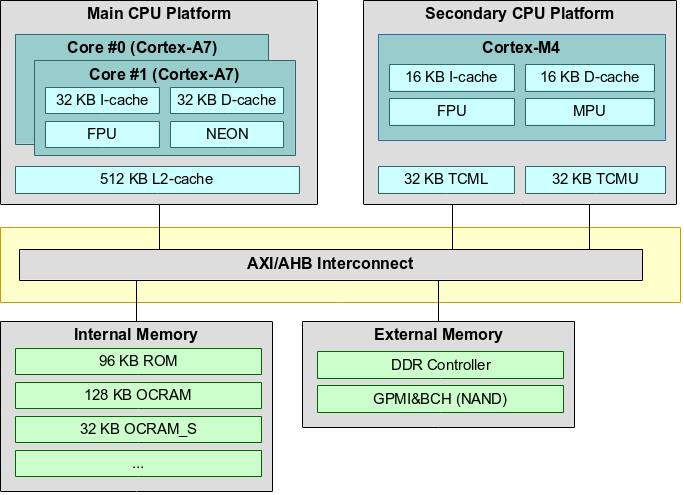

i.MX 7 Cortex-M4 memory locations and performance

The heterogeneous architecture of the NXP i.MX 7 SoC provides a secondary CPU platform with a Cortex-M4 core. This core can be used to run firmware for custom tasks. The SoC has several options where the firmware can be located: There is a small portion of Tightly Coupled Memory (TCM) close to the Cortex-M4 core. A slightly larger amount of On-Chip SRAM (OCRAM) is available inside the SoC too. The Cortex-M4 core is also able to run from external DDR memory (through the MMDC) and QSPI. Furthermore, the Cortex-M4 uses a Modified Harvard Architecture, which has two independent buses and caches for code (Code Bus) and data (System Bus). The memory addressing is still unified, but accesses are split between the buses using the address as discriminator (addresses in the range 0x00000000-0x1fffffff are loaded through the Code Bus, 0x20000000-0xdfffffff are accessed through the System Bus).
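The address split can be expressed as a tiny lookup. The following is a minimal sketch; the function name is made up for illustration, and addresses above 0xdfffffff are simply reported as "other" because the ranges given above do not cover them.

```shell
#!/bin/sh
# Sketch: report which Cortex-M4 bus serves a given address, using only
# the two ranges mentioned in the text.
# m4_bus_for_addr is a hypothetical helper name.

m4_bus_for_addr() {
    addr=$(( $1 ))   # accepts decimal or 0x-prefixed hex
    if [ "$addr" -ge 0 ] && [ "$addr" -le $(( 0x1fffffff )) ]; then
        echo code
    elif [ "$addr" -ge $(( 0x20000000 )) ] && [ "$addr" -le $(( 0xdfffffff )) ]; then
        echo system
    else
        echo other   # not covered by the ranges above
    fi
}
```

For example, `m4_bus_for_addr 0x1fffffff` reports the Code Bus, while the very next address, `0x20000000`, is already served by the System Bus. This matters when deciding where to link code and data sections of the firmware.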


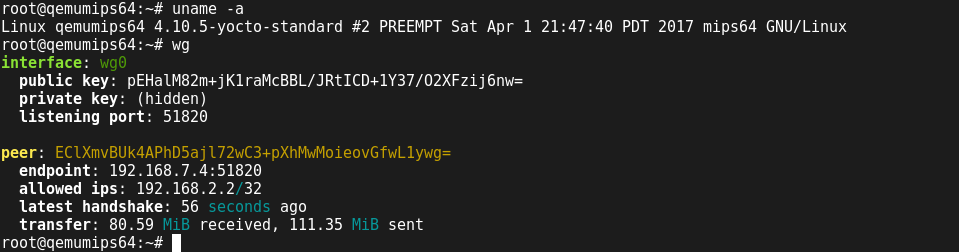

OpenEmbedded recipes for WireGuard VPN

This weekend I finally got around to creating OpenEmbedded recipes for WireGuard. The recipes currently await review and will hopefully become part of the meta-networking layer, which is part of the meta-openembedded repository of the upstream OpenEmbedded project. There are two recipes, one for the kernel module and one for the user space tools. The user space tools have the kernel module as a dependency, hence it is sufficient to install the wireguard-tools package, e.g. by using IMAGE_INSTALL_append in your local.conf:

IMAGE_INSTALL_append = " wireguard-tools"

The kernel module requires kernel version 3.18 or later and has some requirements regarding kernel configuration. The WireGuard website maintains a list of kernel requirements. If you are using the Yocto kernel, the netfilter kernel feature (features/netfilter/netfilter.scc) is enabled by default and seems to be sufficient to run WireGuard. To get started with WireGuard, refer to the excellent Quick Start guide on wireguard.io.
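To quickly check the version requirement on a target, something like the following sketch can be used. The function name is made up for illustration, and it only compares the major and minor number; it does not check any of the kernel configuration requirements.

```shell
#!/bin/sh
# Sketch: check whether a kernel version string is at least MAJOR.MINOR.
# kernel_at_least is a hypothetical helper name.
# $1 = version string (e.g. from `uname -r`), $2 = major, $3 = minor

kernel_at_least() {
    ver=${1%%-*}                 # strip suffixes such as "-rc1" or "-yocto-standard"
    major=${ver%%.*}
    rest=${ver#*.}
    minor=${rest%%.*}
    minor=${minor%%[!0-9]*}      # drop trailing non-digits such as "+"
    [ "$major" -gt "$2" ] || { [ "$major" -eq "$2" ] && [ "$minor" -ge "$3" ]; }
}
```

On the target, `kernel_at_least "$(uname -r)" 3 18 && echo "kernel is new enough"` prints the message only if the running kernel is 3.18 or later.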

Using KVM with Qemu on ARM

This is part two of my blog post about the Kernel-based Virtual Machine (KVM) on a 32-bit ARM architecture. The post is meant as a starting point for those who want to play with KVM and provides a useful collection of Qemu commands for virtualization.

Virtualization host setup

The kernel configuration I used for my platform's host kernel can be found here. Since I run my experiments on a Toradex Colibri iMX7D module, I started with the v4.1 configuration of the BSP kernel, updated it to v4.8 and enabled KVM as well as KSM (Kernel Same-page Merging).

As root file system I use a slightly modified version of the Ångström distribution's “development-image”, version 2015.12 (built from scratch with OpenEmbedded). Any recent ARM root file system should do. I had Qemu v2.6.0 preinstalled (by just adding “qemu” to the image and specifying ANGSTROM_QEMU_VERSION = "2.6.0" in conf/distro/angstrom-v2015.12.conf).

Virtualization guest setup

For the virtualization guest setup I was looking for something minimalistic. I uploaded the compiled binary of the kernel (as a tarred zImage) and the initramfs (as cpio.gz).

I built a custom kernel directly from the v4.7 sources using a modified/stripped-down version of the vexpress_defconfig (virt_guest_defconfig). I found it useful to look into Qemu’s “virt” machine setup code (hw/arm/virt.c) to understand which peripherals are actually emulated (and hence which drivers are actually required).
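With a host and guest prepared like this, starting a guest comes down to a single Qemu invocation. The sketch below only prints such a command line rather than running it. The function name, the file names zImage and initramfs.cpio.gz, the 256 MB of guest memory and the console argument are assumptions for illustration and not necessarily what the full post uses.

```shell
#!/bin/sh
# Sketch: print a qemu-system-arm command line for a KVM guest on the
# "virt" machine. kvm_guest_cmd is a hypothetical helper name; kernel and
# initramfs file names are placeholders.
# $1 = guest kernel image, $2 = guest initramfs

kvm_guest_cmd() {
    kernel=${1:-zImage}
    initrd=${2:-initramfs.cpio.gz}
    # -enable-kvm needs /dev/kvm on the host; -cpu host passes the host CPU through.
    # The "virt" machine emulates a PL011 UART, hence console=ttyAMA0.
    printf '%s\n' "qemu-system-arm -enable-kvm -M virt -cpu host -m 256 -kernel $kernel -initrd $initrd -append console=ttyAMA0 -nographic"
}
```

Printing the command first makes it easy to review and tweak options before actually launching the guest on the target.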

Using the perf utility on ARM

Given two systems, both with a Cortex-A5 CPU, one clocked at 396 MHz without L2 cache and one clocked at 500 MHz with 512 kB of L2 cache: how big is the impact of the L2 cache? Since the clock frequency is different, a simple CPU time comparison of a given program does not answer the question. I tried to answer this question using perf. perf is often used to profile software, but in this case it also proved to be useful to compare two different hardware implementations.

Most CPUs nowadays have internal counters which count various events (e.g. executed instructions, cache misses, executed branches, branch misses etc.). Other hardware, e.g. cache controllers, might expose performance counters too, but this article focuses on the hardware counters exposed by the CPU.
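Counter values such as cycles and instructions do not depend on wall-clock time, so ratios derived from them can be compared across systems with different clock frequencies. The sketch below computes cycles per instruction from two counter values. The function name is made up for illustration, and this ratio is just one possible clock-independent metric, not necessarily the one used in the full article.

```shell
#!/bin/sh
# Sketch: compute cycles per instruction (CPI) from two counter values,
# e.g. as reported by:
#   perf stat -e cycles,instructions ./your_program
# cycles_per_instruction is a hypothetical helper name.
# $1 = cycles, $2 = instructions

cycles_per_instruction() {
    [ "$2" -gt 0 ] || return 1   # avoid division by zero
    awk -v c="$1" -v i="$2" 'BEGIN { printf "%.2f\n", c / i }'
}
```

Running the same program on both systems and comparing the resulting ratios gives a first hint of how much the memory hierarchy stalls the CPU on each of them.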